What is a Data Quality Firewall?

A data quality firewall is a key component of data management. It is a form of data quality monitoring, using software to prevent the ingestion of messy or bad data.

It’s a set of measures or processes to ensure the integrity, accuracy, and reliability of data within an organisation, and helps support data governance strategies. This could involve controls and checks to prevent the entry of inaccurate or incomplete data from data sources into data stores, as well as mechanisms to identify and rectify any data quality issues that arise.

In its simplest form, a data quality firewall could be data stewards checking the data manually. This isn’t recommended, as manual checking is slow and prone to human error. A more effective approach is automation.

An automated approach

Data quality metrics (e.g. completeness, duplication, and validity) can be generated automatically and are useful for identifying data quality issues. At Datactics, with our expertise in AI-augmented data quality, we understand that the most value is derived from data quality rules that are highly specific to an organisation’s context. This includes rules focusing on accuracy, consistency, duplication, and validity. The ability to execute all of these rules should be part of any data quality firewall.
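To make the metrics above concrete, here is a minimal sketch in plain Python. The function names, the sample records, and the simple `"@"` email check are all assumptions made for illustration; they are not part of any Datactics product or API.

```python
from typing import Callable

Record = dict[str, object]


def completeness(records: list[Record], field: str) -> float:
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def duplication(records: list[Record], key_fields: list[str]) -> float:
    """Share of records whose key repeats that of an earlier record."""
    if not records:
        return 0.0
    seen: set[tuple] = set()
    dupes = 0
    for r in records:
        key = tuple(r.get(f) for f in key_fields)
        if key in seen:
            dupes += 1
        else:
            seen.add(key)
    return dupes / len(records)


def validity(records: list[Record], field: str,
             is_valid: Callable[[object], bool]) -> float:
    """Share of records where `field` passes the supplied check."""
    if not records:
        return 1.0
    return sum(1 for r in records if is_valid(r.get(field))) / len(records)


# Hypothetical sample batch, for illustration only.
sample = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 2, "email": "not-an-email"},
    {"id": 4, "email": "d@example.com"},
]

metrics = {
    "completeness_email": completeness(sample, "email"),
    "duplication_id": duplication(sample, ["id"]),
    "validity_email": validity(
        sample, "email", lambda v: isinstance(v, str) and "@" in v
    ),
}
```

Generic metrics like these are a starting point; organisation-specific rules would replace the deliberately naive email check used here.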

These checks are well suited to an API that gives an on-demand view of the data’s health before ingestion into the warehouse. This real-time assessment helps ensure that only clean, high-quality data is stored, significantly reducing downstream errors and inefficiencies.

What Features are Required for a Data Quality Firewall?

The ability to define Data Quality Requirements

The ability to specify what data quality means for your organisation is key. For example, you may want to consider whether data should be processed in situ or passed through an API, depending on data volumes and other factors. Here are a couple of other questions worth considering when defining data quality requirements:

- Which rules should be applied to the data? It goes without saying that not all data is the same. Rules which are highly applicable to the specific business context will be more useful than a generic completeness rule, for example. This may involve checking data types, ranges, and formats, or validation against sources of truth. Data that doesn’t meet the specified criteria can then be rejected.

- What should be done with broken data? Strategies for dealing with broken data should be flexible. Options might include quarantining the entire dataset, isolating only the problematic records, passing all data with flagged issues, or immediately correcting issues, like removing duplicates or standardising formats. All the above should be options for the user of the API. The point is, not every use case is the same and a one-size-fits-all solution won’t be sufficient.
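The two questions above, which rules to apply and what to do with records that fail them, can be sketched together. Everything in this example is hypothetical: the `Action` options, the `apply_firewall` function, the rule names, and the list of accepted currencies stand in for whatever an organisation would actually define. Automatic correction (such as removing duplicates) is left out for brevity.

```python
from enum import Enum
from typing import Callable

Rule = Callable[[dict], bool]


class Action(Enum):
    QUARANTINE_ALL = "quarantine_all"    # reject the whole dataset on any failure
    ISOLATE_BAD = "isolate_bad"          # hold back only the failing records
    PASS_WITH_FLAGS = "pass_with_flags"  # let everything through, annotated


def apply_firewall(records: list[dict], rules: dict[str, Rule],
                   action: Action) -> tuple[list[dict], list[dict]]:
    """Return (accepted, quarantined) according to the chosen action."""
    # Pair each record with the names of the rules it failed.
    checked = [
        (r, [name for name, rule in rules.items() if not rule(r)])
        for r in records
    ]
    if action is Action.QUARANTINE_ALL:
        if any(failed for _, failed in checked):
            return [], [r for r, _ in checked]
        return [r for r, _ in checked], []
    if action is Action.ISOLATE_BAD:
        accepted = [r for r, failed in checked if not failed]
        quarantined = [r for r, failed in checked if failed]
        return accepted, quarantined
    # PASS_WITH_FLAGS: accept every record, attaching the failed rule names.
    return [{**r, "dq_flags": failed} for r, failed in checked], []


# Hypothetical business rules: a range check and a check against a reference list.
KNOWN_CURRENCIES = {"GBP", "EUR", "USD"}
rules: dict[str, Rule] = {
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float))
    and r["amount"] >= 0,
    "currency_known": lambda r: r.get("currency") in KNOWN_CURRENCIES,
}

batch = [
    {"amount": 10.0, "currency": "GBP"},
    {"amount": -5, "currency": "GBP"},
    {"amount": 3, "currency": "XXX"},
]

accepted, quarantined = apply_firewall(batch, rules, Action.ISOLATE_BAD)
```

Making the action a parameter rather than hard-coding it reflects the point that no single strategy fits every use case.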

Key DQ Firewall Features:

Data Enrichment

Data enrichment may involve adding identifiers and codes to the data entering the warehouse. This can help with usability and traceability.

Logging and Auditing

Robust logging and auditing mechanisms should be provided. Log all incoming and outgoing data, errors, and any data quality-related issues. This information can be valuable for troubleshooting and monitoring data quality over time.
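As a rough illustration of the kind of audit trail described above, the sketch below uses Python’s standard `logging` module. The logger name, the `audit_batch` function, and the fields in the audit entry are assumptions, not a prescribed format.

```python
import logging
from datetime import datetime, timezone

logger = logging.getLogger("dq_firewall")  # hypothetical logger name


def audit_batch(batch_id: str, received: int, accepted: int,
                failures: dict[str, int]) -> dict:
    """Log a summary of one batch and return the audit entry for storage."""
    entry = {
        "batch_id": batch_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "received": received,
        "accepted": accepted,
        "rejected": received - accepted,
        "failures": dict(failures),  # rule name -> number of failing records
    }
    logger.info("batch=%s received=%d accepted=%d rejected=%d",
                batch_id, received, accepted, entry["rejected"])
    for rule, count in failures.items():
        logger.warning("batch=%s rule=%s failed_records=%d",
                       batch_id, rule, count)
    return entry


logging.basicConfig(level=logging.INFO)
entry = audit_batch("batch-001", received=100, accepted=97,
                    failures={"currency_known": 3})
```

Returning the entry as well as logging it means the same record can be written to an audit store and reused for reporting.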

Error Handling

A comprehensive error-handling strategy should be provided, with clearly defined error codes and messages to communicate issues to consumers of the API. Guidance on how to resolve or address data quality errors should also be provided.
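One way to pair error codes with messages and remediation guidance is a small catalogue that the API draws on when building a response. The codes, wording, and response shape below are invented for illustration only.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DQError:
    code: str
    message: str
    guidance: str


# Hypothetical error catalogue; real codes would be defined per organisation.
ERRORS = {
    "missing_field": DQError(
        "DQ001",
        "Required field is missing or empty.",
        "Populate the field at source and resubmit the record.",
    ),
    "invalid_format": DQError(
        "DQ002",
        "Value does not match the expected format.",
        "Check the documented format for this field and correct the value.",
    ),
    "duplicate_record": DQError(
        "DQ003",
        "Record duplicates one already received.",
        "Remove the duplicate or confirm which record is current.",
    ),
}


def error_response(kind: str, field: str, row: int) -> dict:
    """Build a response body telling the consumer what failed and how to fix it."""
    err = ERRORS[kind]
    return {**asdict(err), "field": field, "row": row}


response = error_response("missing_field", field="email", row=3)
```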

Reporting

Regular reporting on data quality metrics and issues, including trend analysis, helps in keeping track of the data quality over time.
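A very simple form of trend analysis compares the average pass rate of recent runs with that of the runs before them. The sketch below assumes a pass rate between 0 and 1 is recorded for each run; the function name, window size, and sample history are illustrative.

```python
from statistics import mean


def pass_rate_trend(pass_rates: list[float], window: int = 3) -> float:
    """Mean of the latest `window` runs minus the mean of the `window` runs before.

    A negative result suggests quality is falling; a positive one, improving.
    """
    if window < 1 or len(pass_rates) < 2 * window:
        raise ValueError("need at least 2 * window runs to compare")
    recent = mean(pass_rates[-window:])
    previous = mean(pass_rates[-2 * window:-window])
    return recent - previous


# Hypothetical history of per-run pass rates, oldest first.
history = [0.98, 0.97, 0.97, 0.93, 0.91, 0.90]
change = pass_rate_trend(history)  # negative here, so quality is declining
```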

Documentation

The API documentation should include information about data quality expectations, supported endpoints, request and response formats, and any specific data quality-related considerations.

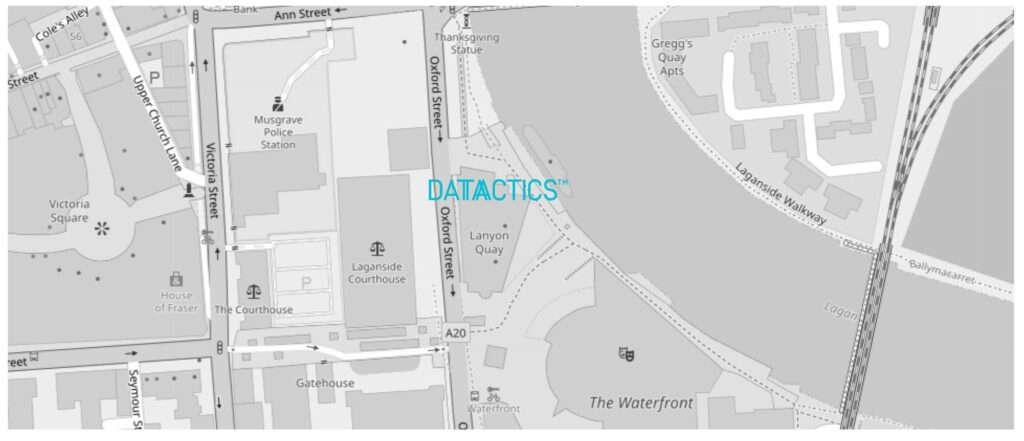

How Datactics can help

You might have noticed that the concept of a Data Quality Firewall is not just limited to data entering an organisation. It’s equally valuable at any point in the data migration process, ensuring quality as data travels within an organisation. Wouldn’t it be nice to know the quality of your data is assured as it flows through your organisation?

Datactics can help with this. Our Augmented Data Quality (ADQ) solution uses AI and machine learning to streamline the process, providing advanced data profiling, outlier detection, and automated rule suggestions.